#CloudGuruChallenge: Improve application performance using Amazon ElastiCache Jun'21

Hey there!

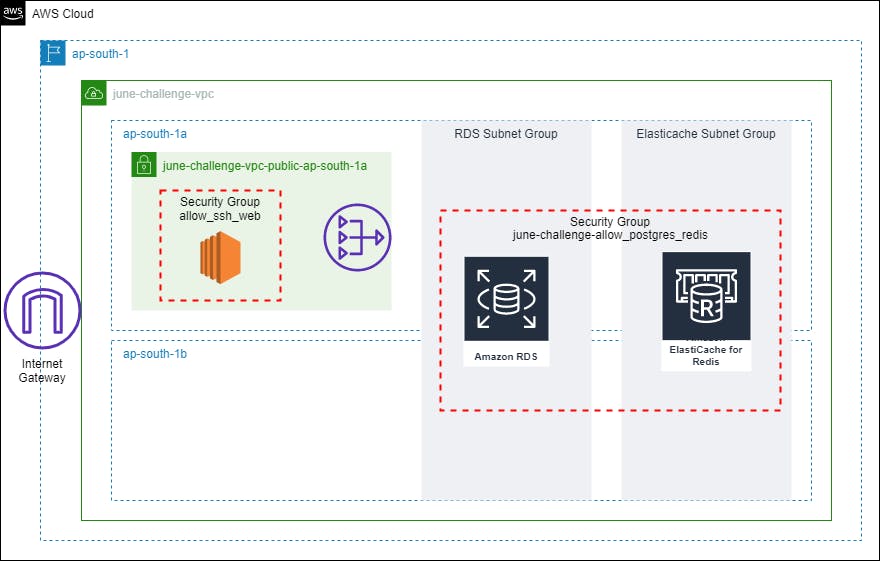

This is another #CloudGuruChallenge blog, this time for June '21. The goal of this challenge was to implement a Redis cluster using Amazon ElastiCache to cache database queries in a simple Python application.

I decided to use Terraform as my Infrastructure as Code (IaC) tool. Terraform has a declarative style: you write code that specifies your desired end state, and the IaC tool itself is responsible for figuring out how to achieve that state.

Here are some snippets from my Terraform code for creating the required resources on AWS.

- Networking: VPC, Subnets, NAT and IGW

```hcl
module "vpc" {
  source = "terraform-aws-modules/vpc/aws"

  name = "${var.namespace}-vpc"
  cidr = "10.0.0.0/16"
  azs  = data.aws_availability_zones.available.names

  create_igw = true

  public_subnets      = ["10.0.101.0/24"]
  database_subnets    = ["10.0.21.0/24", "10.0.22.0/24"]
  elasticache_subnets = ["10.0.31.0/24", "10.0.32.0/24"]

  enable_nat_gateway  = true
  single_nat_gateway  = true
  reuse_nat_ips       = true
  external_nat_ip_ids = aws_eip.nat.*.id

  create_database_subnet_group    = true
  create_elasticache_subnet_group = true
}
```

- Compute: EC2

```hcl
resource "aws_instance" "ec2_public" {
  ami                         = data.aws_ami.ubuntu-20.id
  associate_public_ip_address = true
  instance_type               = "t2.micro"
  key_name                    = aws_key_pair.key_pair.key_name
  subnet_id                   = module.vpc.public_subnets[0]
  vpc_security_group_ids      = [aws_security_group.public_sg.id]

  tags = {
    "Name" = "${var.namespace}-EC2-PUBLIC"
  }
}
```

- Database: Amazon Relational Database Service (RDS) with the PostgreSQL engine

```hcl
module "db" {
  source  = "terraform-aws-modules/rds/aws"
  version = "~> 3.0"

  identifier = "${var.namespace}-postgres"

  engine               = "postgres"
  engine_version       = "12.5"
  family               = "postgres12"
  major_engine_version = "12"
  instance_class       = "db.t2.micro"

  name     = "sampledatabase"
  username = "admin1"
  password = "admin100"
  port     = 5432

  allocated_storage     = 20
  max_allocated_storage = 100
  storage_encrypted     = false

  multi_az               = false
  subnet_ids             = module.vpc.database_subnets
  vpc_security_group_ids = tolist([aws_security_group.private_sg.id])
  publicly_accessible    = false

  maintenance_window              = "Mon:00:00-Mon:03:00"
  backup_window                   = "03:00-06:00"
  enabled_cloudwatch_logs_exports = ["postgresql", "upgrade"]

  backup_retention_period = 0
  skip_final_snapshot     = true
  deletion_protection     = false

  performance_insights_enabled = false
  create_monitoring_role       = false

  tags = {
    Name = "${var.namespace}-postgres"
  }
}
```

- Cache Memory: Amazon ElastiCache with Redis

```hcl
resource "aws_elasticache_cluster" "redis" {
  cluster_id           = "${var.namespace}-redis"
  engine               = "redis"
  node_type            = "cache.t3.micro"
  num_cache_nodes      = 1
  subnet_group_name    = module.vpc.elasticache_subnet_group_name
  security_group_ids   = tolist([aws_security_group.private_sg.id])
  parameter_group_name = "default.redis6.x"
  engine_version       = "6.x"
  port                 = 6379
}
```

Apart from this, security groups are also created to allow the relevant traffic. An RSA private key is generated with the Terraform TLS provider and used as the key pair for accessing the EC2 instance in the public subnet. Generating keys this way is risky because the private key is stored unencrypted in the terraform.tfstate file, which increases the chance of it being compromised. I added the Terraform log, cache, and state files to .gitignore, but generating keys with the TLS provider should be avoided in production scenarios.

The Terraform code outputs a command string for connecting to the EC2 instance. The key is stored locally on the system after the Terraform configuration is applied, so we can simply copy and paste the command string into the terminal to connect to the instance.

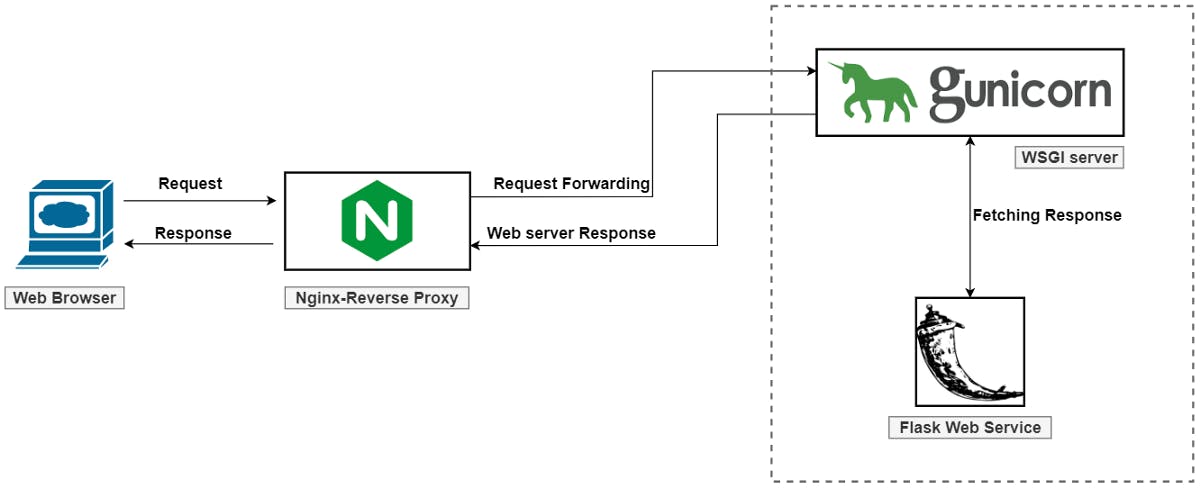

On the instance, NGINX is used as a reverse proxy server. Flask's built-in development server is not suitable for production use, hence Gunicorn (a Python WSGI HTTP server for UNIX) is used to run the Flask application. Started by hand, this works fine until the EC2 instance is rebooted for some reason. To avoid this, create a Gunicorn service and register it with systemd so that it starts at boot. This can be done by creating a gunicorn.service file in the /etc/systemd/system folder.

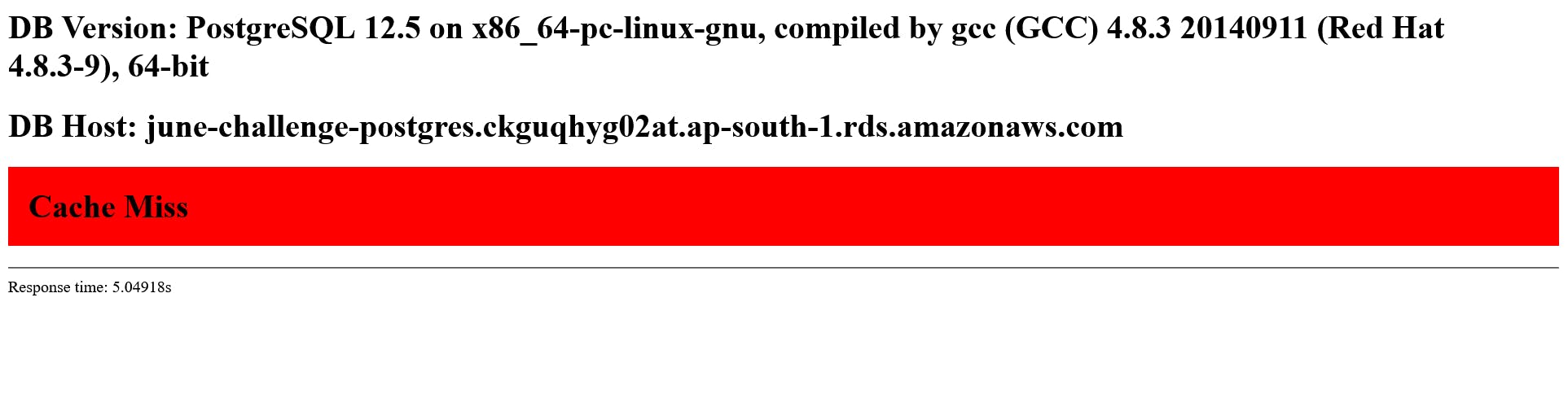

In case of a cache miss, the response time is slightly above 5 seconds. This is due to the function which was created to artificially slow down the Postgres query.

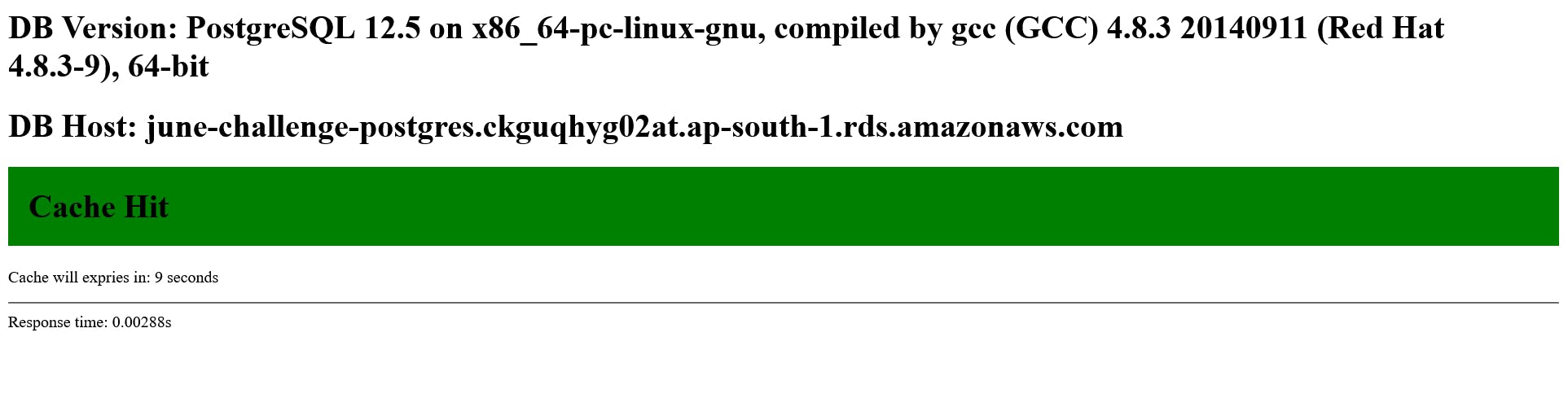

In case of a cache hit, the response is served to the user directly from Redis memory instead of querying Postgres. Hence, the response time is reduced to a fraction of a second.
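The miss-then-hit behaviour described above is the cache-aside pattern, and it can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the code from my repository: `InMemoryCache` is a hypothetical stand-in that mimics the `get` and `setex` calls of a Redis client so the snippet runs without a cluster, and `slow_query` is a hypothetical placeholder for the artificially slowed Postgres query (with a shorter delay so the demo finishes quickly).

```python
import json
import time


class InMemoryCache:
    """Hypothetical stand-in exposing the get/setex subset of a Redis client."""

    def __init__(self):
        self._store = {}

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if time.monotonic() >= expires_at:
            del self._store[key]  # expired entries behave like a miss
            return None
        return value

    def setex(self, key, ttl_seconds, value):
        self._store[key] = (value, time.monotonic() + ttl_seconds)


def slow_query(delay_seconds):
    """Hypothetical placeholder for the artificially slowed Postgres query."""
    time.sleep(delay_seconds)
    return {"result": "placeholder row"}


def fetch(cache, key, delay_seconds=5.0, ttl_seconds=60):
    """Cache-aside read: try the cache first, query the database on a miss."""
    cached = cache.get(key)
    if cached is not None:
        return json.loads(cached), "hit"
    result = slow_query(delay_seconds)
    # Store the serialized result with a TTL so stale data eventually expires.
    cache.setex(key, ttl_seconds, json.dumps(result))
    return result, "miss"


if __name__ == "__main__":
    cache = InMemoryCache()
    for _ in range(2):
        start = time.perf_counter()
        _, status = fetch(cache, "db:version", delay_seconds=0.2)
        print(f"{status}: {time.perf_counter() - start:.3f}s")
```

Run as a script, the first call reports a miss and takes roughly the configured delay, while the second reports a hit and returns almost immediately. With a real Redis client, the same `fetch` function should work as long as the client object provides `get` and `setex`.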

You can find all of the application and infrastructure code in my GitHub repository linked below. Please comment or DM me on social platforms with any feedback. 🥳

Thanks for reading!

Resources:

- Application Starter Code - https://github.com/ACloudGuru/elastic-cache-challenge

- My GitHub Repository - https://github.com/dwivediabhimanyu/elastic-cache-challenge

- LinkedIn - https://www.linkedin.com/in/abhimanyudwivedi

- Twitter - https://twitter.com/dvlprabhi

Learning Resources: